The original post has 58k views; the correct information has 2k. That is the level of efficiency that site has in disseminating correct information.

The timestamps imply the correct information is less than an hour old in that screenshot.

a lie can travel halfway around the world while the truth is putting on its shoes

America does not care about truth. Worse, it actively discourages truth.

Lying is accepted. Making up facts that suit your narrative and shouting down anyone who disagrees gets you rewarded with the highest position of state.

We, the rest of the world, are just watching you guys in horror.

America does not care about truth

Show me a country with social media and I'll show you people who value making their political opponents 'look like fools' by lying.

Almost like people everywhere on the planet don't care about facts.

It's not like there's a state border check for vehicle emissions. You can visit California in a non-California car without an emissions check. Joy's claim doesn't make sense even before fact checking.

There is a border check entering CA but they only ask about fruits and vegetables.

Wow it's true: https://en.wikipedia.org/wiki/California_Border_Protection_Stations

I don't think any other US state has checkpoints. This is a surprise to me.

California grows like 40% of the vegetables for the entire country. So they're very protective of their agriculture.

Florida has a checkpoint for plants and possible invasive species, definitely not for firetrucks helping people though.

Imagine my surprise learning about them by pulling up to one. I don't think it was even near the CA/OR border, but like 50 miles into Cali on the interstate. All of a sudden I see an officer flagging me at a little booth. I figured it was an emergency ahead, or maybe I ended up on a toll road? The first thing he says to me was something along the lines of 'Got any fruit or vegetables in the vehicle?' And I just respond 'I'm sorry, what?' He must get that a lot; he pretty much waved me through as he was explaining.

I brought a prepackaged fruit cup my next trip just to see what would happen but I ended up doing the coastal route and never got stopped going that way.

It's meant to sow discord, not be fact checked or taken seriously by critically-minded people. It's meant to rile up the MAGA's.

Fascist agitprop

This is a great example of the importance of teaching people critical thinking skills.

If you stop to analyze what was written in this sensationalized post rather than acting on your emotions, the first question that should come to mind is "who stopped them?" There are no checkpoints between Oregon and California where cars are turned away from crossing state lines due to emissions. In fact, that would probably violate federal free travel laws, which would supersede any stupid law like that.

Next, consider the source. Is this person trustworthy? Did they provide ample citations to reputable journalistic outlets that verified the factuality of the claims? If not, they may be trying to deceive you with falsehoods or have an ulterior motive for misrepresenting the facts. At best they are repeating claims that they've heard from others and anything they report on should be taken with a grain of salt.

This post doesn't hold up to the slightest amount of scrutiny, but people get fooled every day by crap like this. My advice is that if you hear something that sounds outrageous or too good to be true, stop and think carefully about it for a few minutes, or maybe just wait for another source to report it. Saves you a lot of stress and protects you from endlessly doomscrolling.

The firetrucks were allowed through but Haitian immigrants ate them!

You expect too much from these people, and an increasing share of them aren't genuine people at all.

A friend of mine posted something on Facebook (reposted from a local list in Arizona) claiming that two people were knocking on doors and beating up people, and one woman was in the hospital as a result. Pictures of the perpetrators and everything.

Something felt off, so I did a quick search on their names. And I found an article from some city in Texas where the same rumor had been circulating about that area. The article clarified that the two people had committed some crimes several years ago and were caught, tried, and convicted already.

So someone took one of these, changed the name of the area, and posted it to the local list. Why do people do this? A form of stochastic terrorism maybe?

Edit - I can't find the post (I think my friend deleted it), but I did still have a tab open with the article about it.

I have noticed this as well. Back during the early Facebook years, a "news" outlet would just generate content by posting stories like "Greenville ranked as the city with highest crime rate" every week or so.

Everybody on my Facebook feed would go crazy saying things like "I always knew that town was dangerous, but to hear it outranks New York? TERRIFYING!"

Only issue is that this outlet would have 50 different URLs for the article, each naming a city in a different state (always an immigrant-heavy suburb), and those articles would appear around Facebook and nobody would be the wiser.

It costs almost nothing for them to rewrite the world and your perspective about it. The granularity they are now capable of should give us pause.

Where I live I mostly see this done to support the narrative that immigrants are bad, that you can't trust traditional media, and that you should be voting for the local fascist party.

Afaik the place names and dates are not changed here, I suspect because that would make the perpetrators criminally liable. Instead they work by omission: take a crime from many years ago, don't mention the date but post it on Facebook as if it only recently happened, question in that post why the media isn't mentioning this event or how the non-fascist politicians could let things get this much out of control, and then boost the post with help from others in the Telegram group so that it reaches a wider audience.

“who stopped them?”. There are no checkpoints between Oregon and California

Actually, there are checkpoints along every major roadway into California that do check incoming vehicles, mostly to find and prevent invasive species from entering the state and affecting the agricultural industry, and more broadly to protect the environmental systems in California. There have, in fact, been legal challenges against these checkpoints for violating travel laws, but the checkpoints have remained. They've also been used to seize non-agricultural items like weed and smuggled weapons, so emissions standards checking isn't completely out of the realm of possibility.

Obviously, she's lying and she doesn't know any of this either anyway. But there are checkpoints to enter California.

https://en.wikipedia.org/wiki/California_Border_Protection_Stations

I can't speak for all of them, but the I-5 border station near the Oregon border hasn't even been manned in several years. This gal is so full of shit.

This is not "false", this is just "pants on fire lying".

This person should not have the freedom to speak publicly anymore.

Screw freedom of expression as we currently have it. Freedom of expression should become a right with responsibility. If you can't be responsible, you can't have the right. You either speak the truth or don't get to speak to groups, period.

I'm SO done with literally everyone and everything lying with zero repercussions. Companies lie, all fine. Politicians lie like there is no tomorrow and that's fiiiiine, even Obama lied like 25% and that's fiiiiine, Trump literally lies 99% of the time and it's all fine! Nothing happens, so we just lie more and more and you can't trust anything or anyone anymore.

Freedom of expression on the internet was a big mistake

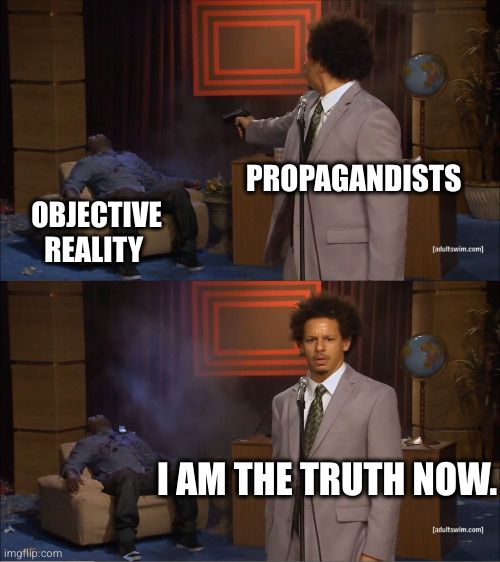

It's a tragic fact that correcting a lie is hugely more costly than making and spreading the lie.

That basically sums up all of far right talking points

This stupid bitch has since deleted the tweet and gotten back to it like it's another Tuesday. OSFM had to put out a statement correcting it. I hate people.

She deleted the original tweet, then reposted it.

My bad, you're right. I tried to check first, but I didn't have the patience to scroll past her claiming America Is So Back because "Starbucks kicked homeless people out of bathrooms," so I ended up not seeing the reposted tweet.

She lies, runs away from OSFM, gets community noted, and still, in the replies:

Um, this is 100% TRUE and WOKE Oregon seems to be covering for WOKE California. Leftists stick together.

There are many people whose work and ideas I deeply respect, but even in my weakest moments, I couldn't possibly idolize them like this useless waste of oxygen does gargling on Trump's ballsack every day.

Because I'm a horrible person, I can foresee that there's zero chance I'll die before doing something monumentally stupid. My greatest shame is realizing it probably won't be as effective as what Luigi did.

I wish leftists stuck together as much as the right thinks we do.

Right wingers haven't cared about the truth for decades. It's just now they don't care whether we know it or not. Just think back to Trump's first term and "alternate truths" and all that. They don't care anymore. They believe what they want to believe, and no amount of facts or reasoning is going to change it.

But yes, everyone lies. Some people just more than others and some will lie to your face with a smirk on their face knowing damn well you know they're lying.

You know how cheaters ruin online games? How their fun relies on fucking everyone, abusing the system and winning without respecting the rules? The same applies to people like her, Trump, Musk, etc. They're "communication cheaters": they don't respect the rules, they abuse the system, yet platforms want us to believe that they deserve to be treated like common users.

"Waah waah mah 🧊 🍑 waah waah censorship" - Fuck you. If free speech was a game, these people would be banned.

Why would known diarrhea fetishist Mila Joy say blatant lies on the internet?

Bot? How do we know that isn't just another of Elon's alts?

I suppose somebody could check if she's called him a good father recently...

Too late. It's already become true, and another example of wokeness for your racist uncle to bang on about.

This is exactly why so many things just feel hopeless to me. The worst people have caught on to, and the parrots don't even realize, that you can just say literally anything and the occupants of your echo chamber will believe it immediately and then refuse to believe whatever reality actually is if it is presented to them by literally any other "outsider."

Hell, even someone of the "in-group" who is not substantial enough will be outed as an "other" if they dare go against the initial message.

I like the idea that someone, mid-fighting the fires, put down their hose to respond to the tweet.

The office of the state fire marshal definitely did not put down a fire hose. Or get up from their desk. (Or did I get whooshed?)

How do we even come back from this?

The French had a similar issue in the tail end of the 1700s; perhaps we could draw inspiration from their work.

At the risk of being called an accelerationist, the fact of the matter is that the only way out is through.

AI is going to make misinformation 100 times worse. There really need to be laws on bot usage on social media, plus data privacy laws.

2100 retweets on the lie.

33 on the truth.

This is something AI would be good for.

Have it search for specific misinformation and reply with a canned response with sources using an official account.

Anyone politicizing the fires from the comfort of a place that isn't burning to the ground should immediately shut the fuck up.