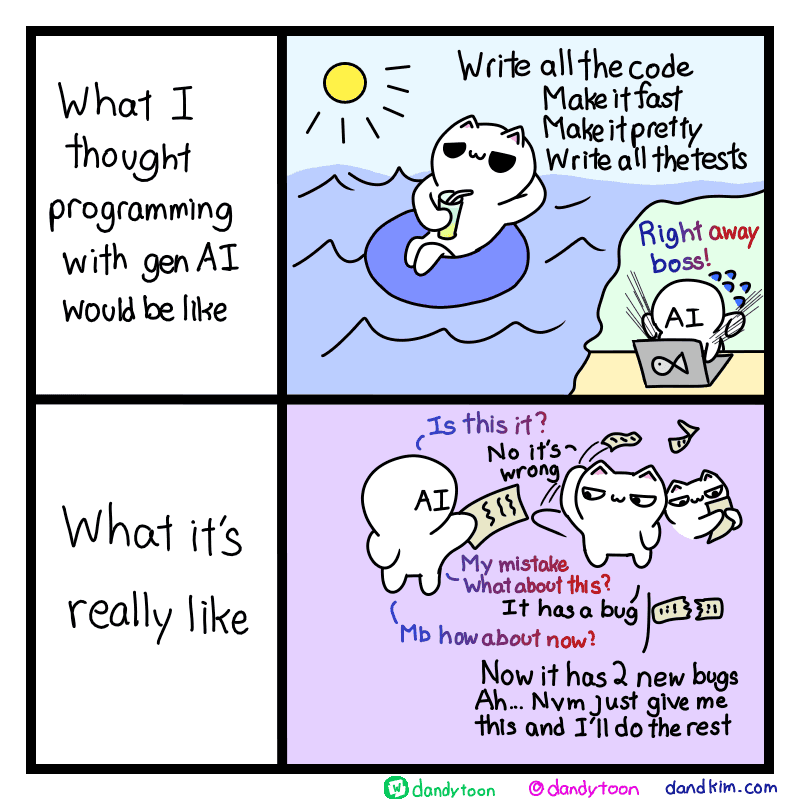

Yeah, in the time it takes me to describe the problem to the AI, I could program it myself.

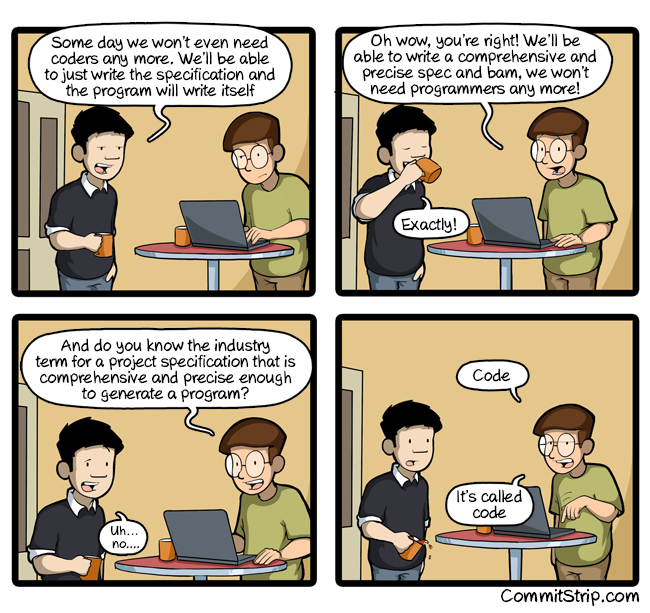

This is why it is called a programming language: it only exists to be able to tell the machine what to do in an unambiguous (in contrast to natural language) way.

Ugh I can’t find the xkcd about this where the guy goes, “you know what we call precisely written requirements? Code” or something like that

Not xkcd in this case

https://www.commitstrip.com/en/2016/08/25/a-very-comprehensive-and-precise-spec/

This reminds me of a colleague who was always ranting that our code was not documented well enough. He did not understand that documenting code in easily understandable sentences for everybody would fill whole books and that a normal person would not be able to keep the code path in his mental stack while reading page after page. Then he wanted at least the shortest possible summary of the code, which of course is the code itself.

The guy basically did not want to read the code to understand the logic behind it. When I took an hour and literally read the code for him and explained what I was reading, including the well-placed comments here and there, everything was clear.

AI is like this in my opinion. Some guys waste hours to generate code they can’t debug for days because they don’t understand what they read, while it would take maybe two hours to think and a day to implement and test to get the job done.

I don’t like this trend. It’s like the people that can’t read docs or texts anymore. They need some random person making a 43-minute YouTube video to write code they don’t understand. Taking shortcuts in life rarely goes well in the long run. You have to learn and refine your skills each and every day to be and stay competent.

AI is a tool in our toolbox. You can use it to be more productive. And that’s it.

This goes for most LLM things. The time it takes to get the word calculator to write a letter could easily have been used to just write the damn letter.

It's doing pretty well when it's doing a few words at a time under supervision. Also, it does it better than newbies.

Now if only those people below newbies, those who don't even bother to learn, didn't hope to use it to underpay average professionals.. And if it wasn't trained on copyrighted data. And didn't take up already limited resources like power and water.

I think there might be a lot of value in describing it to an AI, though. It takes a fair bit of clarity of thought to get something resembling what you actually want. You could use a junior or rubber duck instead, but the rubber duck doesn't make stupid assumptions to demonstrate gaps in your thought process, and a junior takes too long and gets demoralized when you have to constantly revise their instructions and iterate over their work.

Like the output might be garbage, but it might really help you write those stories.

When I'm struggling with a problem it helps me to explain it to my dog. It's great for me to hear it out loud and if he's paying attention, I've got a needlessly learned dog!

The needlessly learned dogs are flooding the job market!

I have a bad habit of jumping into programming without a solid plan, which results in lots of rewrites and wasted time. Funnily enough, describing to an AI how I want the code to work forces me to lay out a basic plan and get my thoughts in order, which makes building the final product immensely easier.

This doesn't require AI; it just gave me an excuse to do it as a solo actor. I should really do it for more problems, because I can wrap my head around them better when thinking in human-readable terms rather than thinking about what programming method to use.

A rubber ducky is cheaper and not made by stealing others' work. Also cuter.

In my experience, you can't expect it to deliver great working code, but it can always point you in the right direction.

There were some situations in which I just had no idea how to do something, and it pointed me to the right library. The code itself was flawed, but with this information, I could use the library documentation and get it to work.

ChatGPT has been spot on for my DDLs. I was working on a personal project and was feeling really lazy about setting up a postgres schema. I said I wanted a postgres DDL and just described the application in detail, and it responded with pretty much what I would have done (maybe better), with perfect relationships between tables and solid naming conventions, and very little work left for me to do on it. I love it for more boilerplate stuff or, sorta like you said, just getting me going. Super complicated code usually doesn't work perfectly, but I always use it for my DDLs and similar now.

The real problem is when people don't realize something is wrong and then get frustrated by the bugs. Though I guess that's a great learning opportunity on its own.

It can point you in a direction, for sure, but sometimes you find out much later that it's a dead-end.

It’s the same with using LLMs for writing. It won’t deliver a finished product, but it will give you ideas that can be used in the final product.

AI in the current state of technology will not and cannot replace understanding the system and writing logical and working code.

GenAI should be used to get a start on whatever you're doing, but shouldn't be taken beyond that.

Treat it like a psychopathic boiler plate.

> Treat it like a psychopathic boiler plate.

That's a perfect description, actually. People debate how smart it is - and I'm in the "plenty" camp - but it is psychopathic. It doesn't care about truth, morality or basic sanity; it craves only to generate standard, human-looking text. Because that's all it was trained for.

Nobody really knows how to train it to care about the things we do, even approximately. If somebody makes GAI soon, it will be by solving that problem.

My dad's re-learning Python coding for work rn, and AI has saved him a couple of times, because he'd have no idea how to even start, but AI points him in the right direction, mentioning the correct functions to use and all. He can then look up the details in the documentation.

You don't need AI for that; for years you asked a search engine and got the answer on Stack Overflow.

And before Stack Overflow, we used books. Did we need it? No. But Stack Overflow was an improvement, so we moved to that.

In many ways, AI is an improvement on Stack Overflow. I feel bad for people who refuse to see it, because they're missing out on a useful and powerful tool.

This is the experience of a senior developer using genai. A junior or non-dev might not leave the "AI is magic" high until they have a repo full of garbage that doesn't work.

This was happening before this “AI” craze.

Gen AI is best used with languages that you don't use that much. I might need a python script once a year or once every 6 months. Yeah I learned it ages ago, but don't have much need to keep up on it. Still remember all the concepts so I can take the time to describe to the AI what I need step by step and verify each iteration. This way if it does make a mistake at some point that it can't get itself out of, you've at least got a script complete to that point.

Exactly. I can’t remember the syntax for all the languages that I have used over the last 40 years, but AI can give me a pretty good start, and it takes hours off reviewing code books.

I actually disagree. I feel it's best to use for languages you're good with, because it tends to introduce very subtle bugs which can be very difficult to debug, and code which looks accurate but isn't. If you're not totally familiar with the language, it can be even harder.

Why is the AI speaking in a bisexual gradient?

It's the "new hype tech product background" gradient lol

Except AI doesn't say "Is this it?"

It says, "This is it."

Without hesitation and while showing you a picture of a dog labeled cat.

It’s almost like working with shitty engineers.

Shitty engineers that can do grunt work, don't complain, don't get distracted and are great at doing 90% of the documentation.

But yes. Still shitty engineers.

Great management consultants though.

My workmate literally used copilot to fix a mistake in our websocket implementation today.

It made a one-line change... turns out it made the problem worse.

AI coding in a nutshell. It makes the easy stuff easier and the hard stuff harder by leading you down thirty incorrect paths before you toss it and figure it out yourself.

I guess whether it's worth it depends on whether you hate writing code or reading code the most.

Is there anyone who likes reading code more than writing it?

Probably a mathematician or physicist somewhere.

I hate reading the code I wrote two days ago.

So what it’s really like is only having to do half the work?

Sounds good, reduced workload without some unrealistic expectation of computers doing everything for you.

> So what it’s really like is only having to do half the work?

If it's automating the interesting problem solving side of things and leaving just debugging code that one isn't familiar with, I really don't see value to humanity in such use cases. That's really just making debugging more time consuming and removing the majority of fulfilling work in development (in ways that are likely harder to maintain and may be subject to future legal action for license violations). Better to let it do things that it actually does well and keep engaged programmers.

People who rely on this shit don’t know how to debug anything. They just copy some code, without fully understanding the library or the APIs or the semantics, and then they expect someone else to debug it for them.

In my experience, all the time it saves me (probably even more) is wasted on spotting bugs, and the bugs are in very subtle places.

From a person who does zero coding: it's a godsend.

Makes sense. It's like having your personal undergrad hobby coder. It may get something right here and there, but for professional coding it's still worse than the gold standard (googling Stack Overflow).

Nah, you just need to be really specific in the requirements you give it. And if the scope of work you're asking for is too large, you need to do the high-level design and decompose it into multiple parts for ChatGPT to implement.