Pretty sure Valve has already realized the correct way to be a tech monopoly is to provide a good user experience.

Idk, I kind of disagree with some of their updates, at least in the UI department.

They treat customers well, though.

Yeah. Steam and I are getting older. Would be nice to adjust simple things like text size in the tool.

Also that ‘Live’ shit bothers me. Live means live. Not ‘was recorded live, and is now presented perpetually as LIVE’.

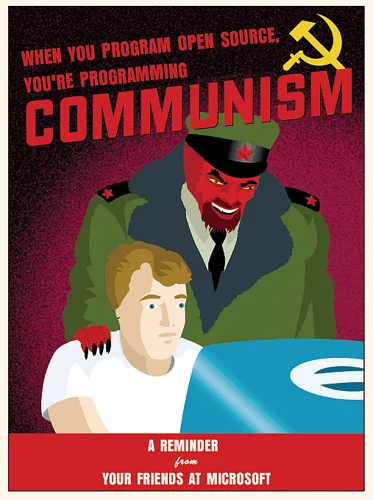

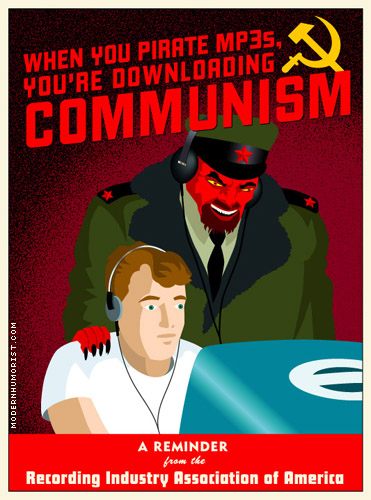

Time to dust off this old chestnut

I remember this being some sort of Apple meme at some point, hence the gumdrop iMac.

I think the inspiration is from Modern Humorist (note: there's no HTTPS)

Edit: here's the image in question:

Personally, I think Microsoft open sourcing .NET was the first clue open source won.

Wall Street’s panic over DeepSeek is peak clown logic—like watching a room full of goldfish debate quantum physics. Closed ecosystems crumble because they’re built on the delusion that scarcity breeds value, while open source turns scarcity into oxygen. Every dollar spent hoarding GPUs for proprietary models is a dollar wasted on reinventing wheels that the community already gave away for free.

The Docker parallel is obvious to anyone who remembers when virtualization stopped being a luxury and became a utility. DeepSeek didn’t “disrupt” anything—it just reminded us that innovation isn’t about who owns the biggest sandbox, but who lets kids build castles without charging admission.

Governments and corporations keep playing chess with AI like it’s a Cold War relic, but the board’s already on fire. Open source isn’t a strategy—it’s gravity. You don’t negotiate with gravity. You adapt or splat.

Cheap reasoning models won’t kill demand for compute. They’ll turn AI into plumbing. And when’s the last time you heard someone argue over who owns the best pipe?

Governments and corporations still use the same playbooks because they're still oversaturated with Boomers who haven't learned a lick since 1987.

DeepSeek shook the AI world because it’s cheaper, not because it’s open source.

And it’s not really open source either. Sure, the weights are open, but the training materials aren’t. Good luck looking at the weights and figuring things out.

I think it's both. OpenAI was valued at a certain point because of a perceived moat of training costs. The cheapness killed the myth, but open sourcing it was the coup de grâce, as they couldn't use the courts to put the genie back into the bottle.

True, but they also released a paper that detailed their training methods. Is the paper sufficiently detailed such that others could reproduce those methods? Beats me.

I would say that in comparison to the standards used for top ML conferences, the paper is relatively light on the details. But nonetheless some folks have been able to reimplement portions of their techniques.

ML in general has a reproducibility crisis. Lots of papers are extremely hard to reproduce, even if they're open source, since the optimization process is partly random (ordering of batches, augmentations, nondeterminism in GPUs etc.), and unfortunately even with seeding, the randomness is not guaranteed to be consistent across platforms.
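A minimal Python sketch of the two effects described above, under simplifying assumptions: `batch_order` is a hypothetical helper (real frameworks have their own seeding and data-loader APIs), and plain Python floats stand in for GPU arithmetic. Seeding a dedicated RNG makes the batch ordering repeatable, but it does nothing about the order in which parallel hardware adds numbers, and floating-point addition is not associative.

```python
import random


def batch_order(num_examples, batch_size, seed):
    """Hypothetical helper: shuffle example indices with an isolated,
    seeded RNG and split them into batches for one epoch."""
    rng = random.Random(seed)  # dedicated RNG, independent of global state
    indices = list(range(num_examples))
    rng.shuffle(indices)
    return [indices[i:i + batch_size] for i in range(0, num_examples, batch_size)]


# Same seed, same interpreter: the batch ordering is reproduced exactly.
a = batch_order(10, 4, seed=0)
b = batch_order(10, 4, seed=0)
print(a == b)  # True

# Seeding does not fix summation order. A parallel reduction that adds the
# same values in a different order can produce a different result.
left_to_right = (0.1 + 0.2) + 0.3
regrouped = 0.1 + (0.2 + 0.3)
print(left_to_right == regrouped)  # False
```

Tiny rounding differences like this can compound over millions of training steps, which is one reason a seeded run may still not match bit-for-bit on different hardware or library versions.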

I hate to disagree, but IIRC DeepSeek is not an open-source model but an open-weight one?

It's tricky. There is code involved, and the code is open source. There is a neural net involved, and it is released as open weights. The part that is not available is the "input" that went into the training. This seems to be a common way in which models are released as both "open source" and "open weights", but you wouldn't necessarily be able to replicate the outcome with $5M or whatever it takes to train the foundation model, since you'd have to guess about what they used as their input training corpus.

I view it this way: the source code of the model is the training data. The code supplied is a bespoke compiler for it, which emits a binary blob (the weights). A compiler is written in code too, just like any other program. So what they released is the equivalent of the compiler's source code, plus the binary blob it output when fed the training data (the source code), which they did NOT release.

Not exactly sure what "dominating" a market means, but the title makes a good point: innovation requires much more cooperation than competition. And the 'AI race' between nations is an antiquated mainframe pushed by media.

Didn't it turn out that they used 10,000 Nvidia cards with the 100-series chips, and that the "low-level success" and "low cost" were a lie?

Also, they aren't actually open source? Only the weights are open?

I'm not too informed about DeepSeek. Is it real open-source, or fake open-source?

It’s semi-open, not fully open source in the sense typically meant.

DeepSeek is the company, R1 is an MIT-licensed product, and they have the Qwen-based models under the Apache license.

You can download, modify, and run it locally. There are many copies online outside DeepSeek's control.