I'd need to read the full peer-reviewed paper, which, based on this article, still seems to be pending. Until then, here are my thoughts on the blog post, as someone who's education-adjacent but scientifically trained.

Most critically, they don't state what the control arm was. Was it a passive control? If so, of course a group receiving AI tutoring will show benefits compared to a group doing nothing at all. How does AI tutoring compare to human tutoring?

Also important: they were assessing three areas, namely English learning, Digital Skills, and AI Knowledge. Intuitively, I can see how a language model could help with English. I'm very, very skeptical of how they define the last two domains. I know what a standardized English test looks like, but I don't know what they were assessing in the other two. Does "Digital Skills" mean computer or typing proficiency? If so, obviously you'll see a benefit after kids spend six weeks typing. And "AI Knowledge"??

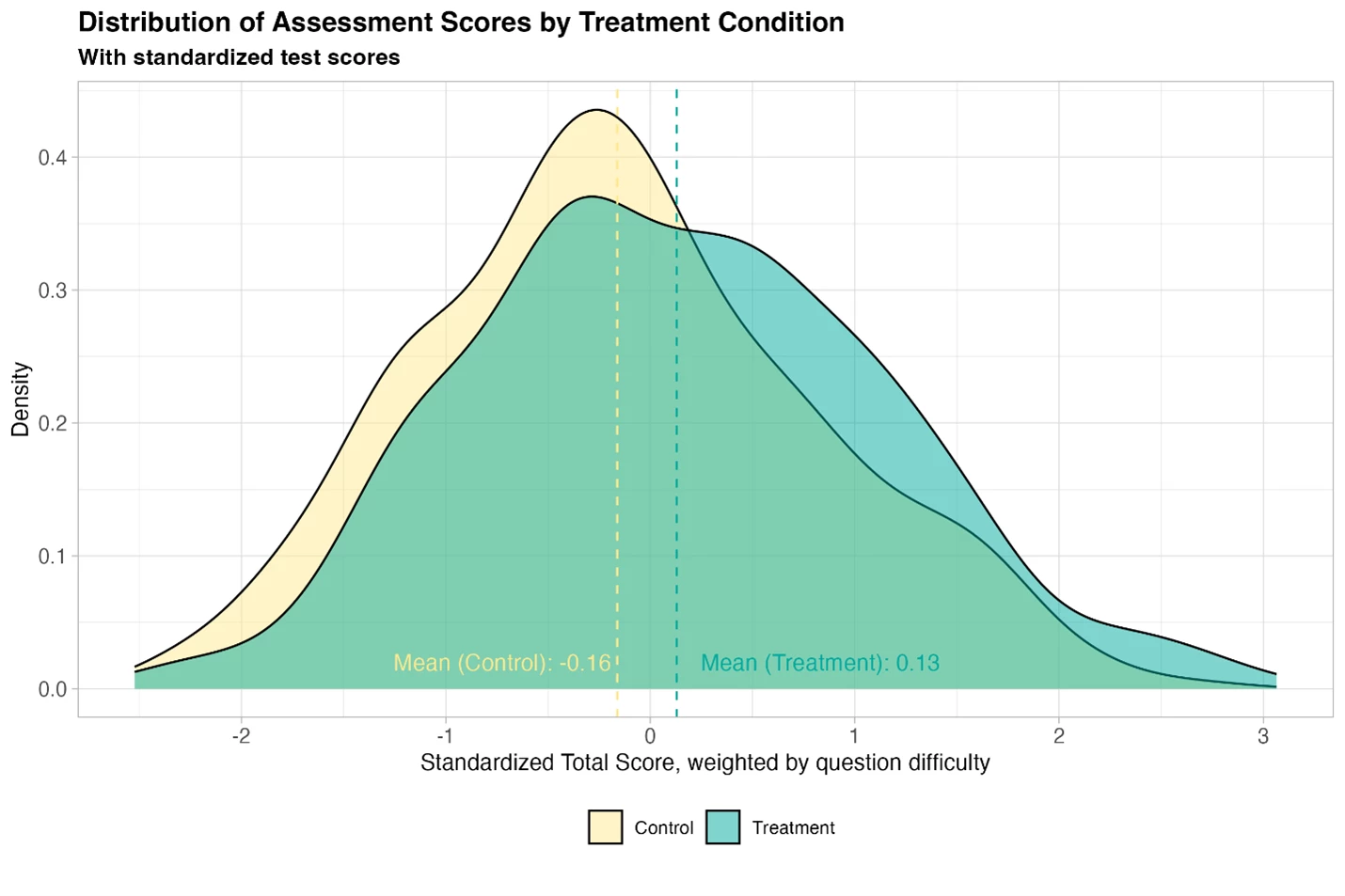

ETA: They also show a figure of test scores for both groups. It appears to be a composite score across the three domains. How do we know the "AI Knowledge" or "Digital Skills" domain wasn't driving the effect?

ETA2: I need to see more evidence before I can support their headline claim of huge growth equivalent to two years of learning. Quote from the article: "When we compared these results to a database of education interventions studied through randomized controlled trials in the developing world, our program outperformed 80% of them, including some of the most cost-effective strategies like structured pedagogy and teaching at the right level." Did they compare like with like, i.e., English-instruction interventions against their English assessment? Or are they comparing "AI Knowledge" gains to gains in some other domain entirely?

That said, they do show figures demonstrating improved scores in all three areas, a correlation between the number of tutoring sessions and test performance, and they claim benefits in everyday academic performance. That's encouraging. I'd like to see long-term retention: do students in the treatment arm still perform better a year from now? At graduation? I know that data will take time to collect, though.