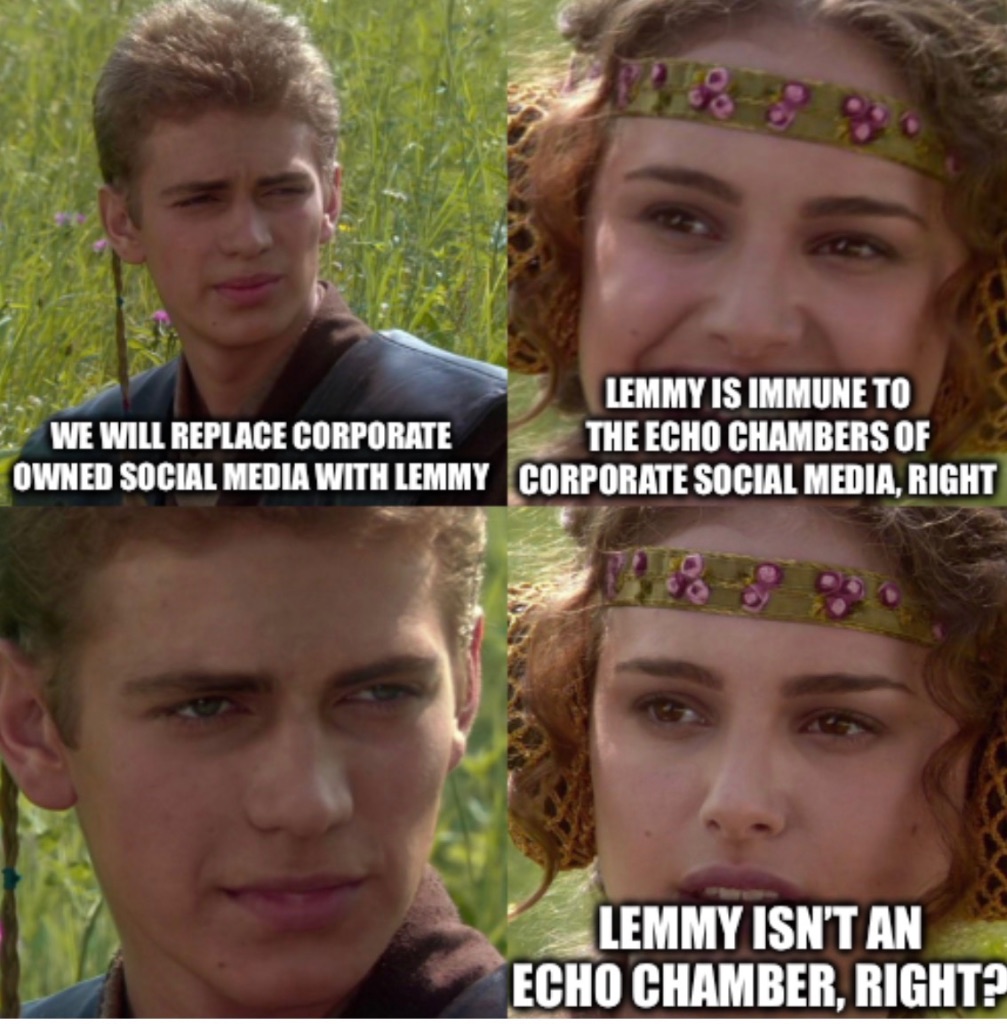

“Fixing” social media is like “fixing” capitalism. Any human-made system can be changed, destroyed, or rebuilt. It’s not an impossible task, but it will require a fundamental shift in the way we see, talk to, and value each other as people.

The one thing I know for sure is that social media won’t ever improve if we all accept the narrative that it can’t be improved.

We live in capitalism. Its power seems inescapable. So did the divine right of kings. Any human power can be resisted and changed by human beings. Resistance and change often begin in art, and very often in our art, the art of words.

-Ursula K. Le Guin