this post was submitted on 21 Jan 2024

1348 points (99.4% liked)

Programmer Humor



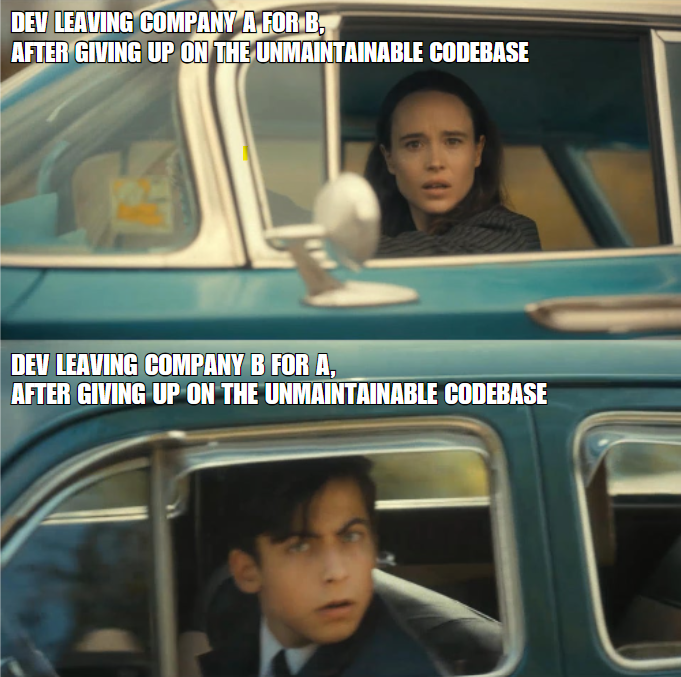

Project A: Has 6 different implementations of the same complex business logic.

Project B: Has one implementation of the complex business logic... But it's ALL in one function with 17 arguments and 1288 lines of code.

"The toast always lands the buttered side down."

Only 1288 lines? Can I raise you a 6000+ line stored procedure that calls multiple different SQL functions that each implement a slightly different variation of the same logic?

Are there triggers in the sql database? It's too easy otherwise

There are on delete triggers to fix circular dependencies when deleting rows, triggers on update and triggers on row creation!

Feels like my old job.

Project B is just called neural network

Actually, I bet you could implement that in less. You should be able to legibly get several weights in one line.

You have my interest! (Mainly because I don't know the first thing about implementing neural networks)

At the simplest, it takes in a vector of floating-point numbers, multiplies it element by element with each of several similar vectors (the "weights"), sums each product, applies a RELU* to the result, and then uses those values as the input vector for another layer with its own weights (or gives them as output). The magic is in the weights.

This operation is a simple matrix-by-vector product followed by element-wise RELU, if you know what that means.

In Haskell, something like:

    layer layerInput layerWeights = map relu $ map sum $ map (zipWith (*) layerInput) layerWeights

    foldl layer modelInput modelWeights

Where modelWeights is [[[Float]]], and so layer has type [Float] -> [[Float]] -> [Float].

* RELU: if i>0 then i else 0. It could also be another nonlinear function, but RELU is obviously fast and works about as well as anything else. There's interesting theoretical work on certain really weird functions, though.

Less simple, it might have a set pattern of zero weights which can be ignored, allowing fast implementation with a bunch of smaller vectors, or have pairwise multiplication steps, like in the Transformer. Aaand that's about it, all the rest is stuff that was figured out by trial and error like encoding, and the math behind how to train the weights. Now you know.
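For anyone who wants to actually run the Haskell one-liner above, here is a minimal self-contained sketch. It only fills in the pieces the comment leaves implicit: `relu` is written out from the footnote, and `runModel` and `exampleWeights` are hypothetical names and made-up numbers for illustration, not a trained model. There are no bias terms, and `zipWith` silently truncates if an input and a weight row differ in length.

```haskell
-- ReLU as described in the footnote: keep positive values, clamp the rest to zero.
relu :: Float -> Float
relu i = if i > 0 then i else 0

-- One layer: dot the input with each weight row, then apply ReLU to each sum.
-- Same computation as the one-liner, with the three maps fused into one.
layer :: [Float] -> [[Float]] -> [Float]
layer layerInput layerWeights =
  map (relu . sum . zipWith (*) layerInput) layerWeights

-- The whole model: fold the layers over the input, first layer first.
-- (runModel is a hypothetical name; the original just writes the foldl inline.)
runModel :: [Float] -> [[[Float]]] -> [Float]
runModel = foldl layer

-- Made-up weights: 2 inputs -> 2 hidden neurons -> 1 output neuron.
exampleWeights :: [[[Float]]]
exampleWeights =
  [ [[1, -1], [0.5, 0.5]]  -- hidden layer, one row per neuron
  , [[2, 1]]               -- output layer
  ]
```

Loaded into GHCi, `runModel [3, 1] exampleWeights` evaluates to `[6.0]`: the hidden layer gives `[2.0, 2.0]`, and the output neuron computes 2*2 + 1*2. With `[1, 3]` as input the first hidden neuron goes negative and gets clamped by the ReLU, so the result is `[2.0]`.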

Assuming you use hex values for 32-bit weights, you could write a line with 4 no problem:

    wgt35 = [0x1234FCAB, 0x1234FCAB, 0x1234FCAB, 0x1234FCAB];

And, you can sometimes get away with half-precision floats.
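To make the hex idea concrete, here is a small sketch of how such a line could be turned back into floats in Haskell, assuming the hex values are raw IEEE 754 single-precision bit patterns (the comment above doesn't say which encoding it has in mind). `wgtBits` and `wgt` are hypothetical names, and the bit patterns are picked so that the decoded values are easy to check by hand.

```haskell
import Data.Word (Word32)
import GHC.Float (castWord32ToFloat)

-- Four weights on one line, written as raw 32-bit patterns (illustrative values).
wgtBits :: [Word32]
wgtBits = [0x3F800000, 0xBF000000, 0x40000000, 0x00000000]

-- Reinterpret each bit pattern as a single-precision float (no numeric conversion).
wgt :: [Float]
wgt = map castWord32ToFloat wgtBits
```

Here `wgt` evaluates to `[1.0, -0.5, 2.0, 0.0]`. Half-precision weights would need a 16-bit decoder, which `base` doesn't provide, so that part is left out.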

That's cool, though honestly I haven't fully understood it, probably because I don't know Haskell; that line looked like complete gibberish to me lol. At least I think I got the gist of things on a high level. I'm always curious to understand but never dare to dive deep (holds self back from making deep learning joke). Much appreciated btw!

Yeah, maybe somebody can translate for you. I considered using something else, but it was already long and I didn't feel like writing out multiple loops.

No worries. It's neat how much such a comparatively simple concept can do, with enough data to work from. Circa-2010 I thought it would never work, lol.

Ikr! It still sounds so incredible to me