Just disabled it.

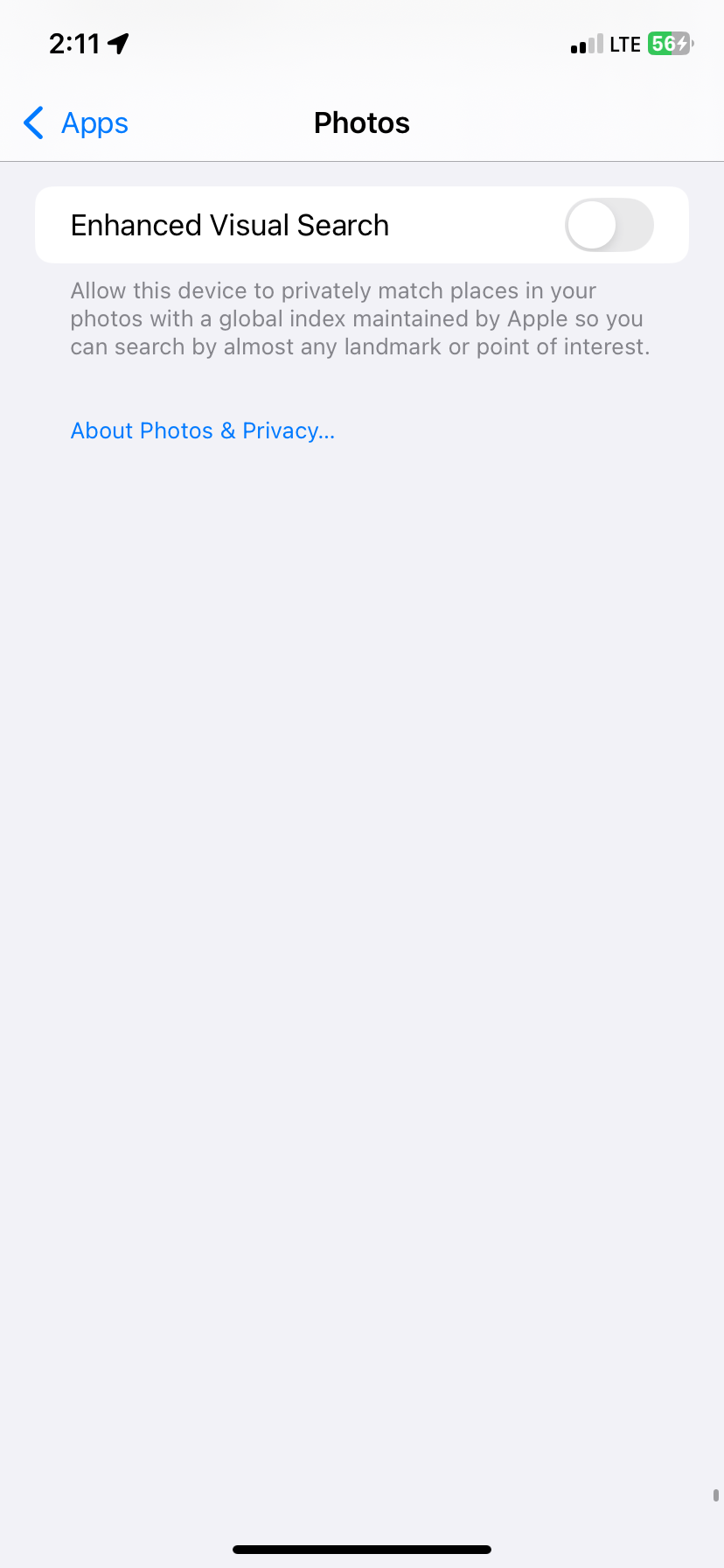

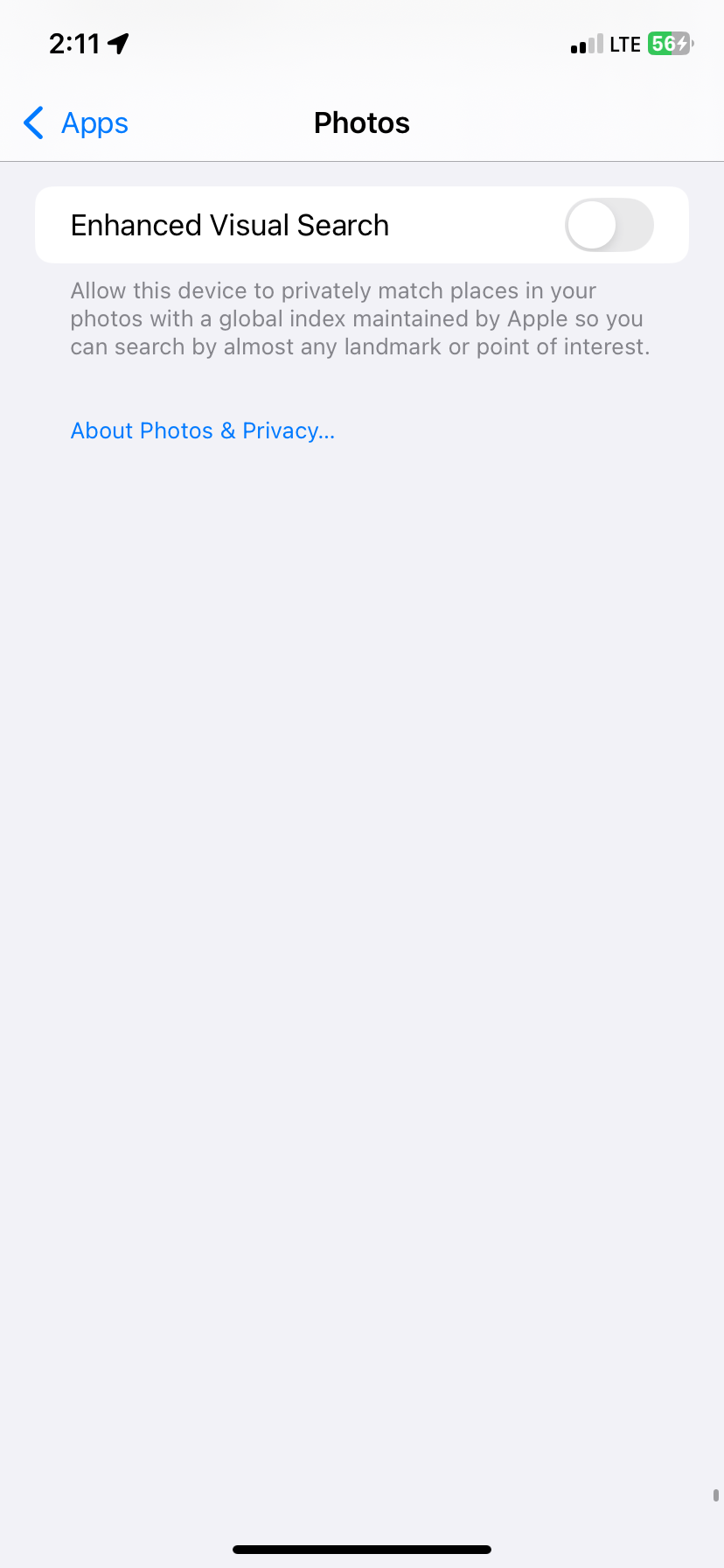

Settings > Apps > Photos, then scroll all the way down.

Being able to partially opt out of a privacy nightmare still leaves a lot to be desired. 😔

I want anything AI-related to be forced to be opt-in. I’m sick of having to find out about these things on the news/Lemmy/wherever, when it is likely already too late to stop them from using the data.

These days some settings can be disabled, but is that really an improvement? Yes, you won't see the "enhanced search" results, but how do you know that Apple isn't going to analyse your photos the same way anyway?

MVP

Better still, don't update

So what happens on your phone doesn't stay on your phone? 😐

Hasn't been like that for years unless you're running a custom setup. The default is no privacy.

It’s done on device, for the most part.

Apple did explain the technology in a technical paper published on October 24, 2024, around the time that Enhanced Visual Search is believed to have debuted. A local machine-learning model analyzes photos to look for a "region of interest" that may depict a landmark. If the AI model finds a likely match, it calculates a vector embedding – an array of numbers – representing that portion of the image.

The device then uses homomorphic encryption to scramble the embedding in such a way that it can be run through carefully designed algorithms that produce an equally encrypted output. The goal is that the encrypted data can be sent to a remote system for analysis without whoever operates that system knowing the contents of that data; they can only perform computations on it, the results of which remain encrypted. The input and output are end-to-end encrypted, and not decrypted during the mathematical operations, or so it's claimed.
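For anyone wondering what "computing on encrypted data" looks like in practice, here is a minimal toy sketch. It uses the textbook Paillier scheme, which was picked only because it fits in a few lines; it is not claimed to be the scheme Apple uses. The primes are tiny and completely insecure, and all the function names are made up for illustration. The "server" function only ever sees ciphertexts, yet the client can decrypt a correct dot product at the end.

```python
import math
import secrets

# Toy Paillier keypair. Tiny primes for illustration only: NOT secure.
P, Q = 1789, 1867
N = P * Q                      # public modulus
N2 = N * N
G = N + 1                      # standard simple choice of generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private: lcm(P-1, Q-1)
MU = pow(LAM, -1, N)           # private: inverse of LAM mod N (valid for G = N + 1)

def encrypt(m):
    """Client side: encrypt an integer 0 <= m < N with fresh randomness."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N2) * pow(r, N, N2)) % N2

def decrypt(c):
    """Client side: recover the plaintext using the private values."""
    return ((pow(c, LAM, N2) - 1) // N * MU) % N

def add_encrypted(c1, c2):
    """Server side: multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % N2

def scale_encrypted(c, k):
    """Server side: raising a ciphertext to k multiplies the hidden plaintext by k."""
    return pow(c, k, N2)

def encrypted_dot(enc_vec, plain_vec):
    """Server side: dot product of an encrypted vector with a plaintext one."""
    acc = encrypt(0)  # encryption only needs the public values N and G
    for c, w in zip(enc_vec, plain_vec):
        acc = add_encrypted(acc, scale_encrypted(c, w))
    return acc

# The client encrypts its (hypothetical) embedding; the server scores it
# against a plaintext database entry without ever decrypting anything.
query = [3, 1, 4]
landmark = [2, 7, 1]
enc_score = encrypted_dot([encrypt(x) for x in query], landmark)
print(decrypt(enc_score))  # only the key holder can read the score
```

Note that this only shows the math works. It says nothing about whether a real deployment holds the keys where it says it does, which is the part you have to take on trust.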

The dimension and precision of the embedding are adjusted to reduce the high computational demands of this homomorphic encryption (presumably at the cost of labeling accuracy) "to meet the latency and cost requirements of large-scale production services." That is to say, Apple wants to minimize its cloud compute cost and mobile device resource usage for this free feature.
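To make the "dimension and precision" trade-off concrete, here is a rough sketch under my own assumptions. Dropping trailing dimensions is a crude stand-in for whatever dimensionality reduction is actually used, and the function names and numbers are hypothetical. The point is just that a shorter, low-bit integer vector is far cheaper to encrypt and compute on, while staying close to the original in direction.

```python
import math

def reduce_embedding(vec, keep_dims, bits):
    """Keep the first keep_dims values and quantize them to signed `bits`-bit integers.

    Returns the integer vector and the step size needed to approximately
    reconstruct the original floats (value ~= integer * step).
    """
    truncated = vec[:keep_dims]
    scale = max(abs(x) for x in truncated) or 1.0   # avoid dividing by zero
    levels = 2 ** (bits - 1) - 1                    # e.g. 7 for 4-bit signed
    quantized = [round(x / scale * levels) for x in truncated]
    return quantized, scale / levels

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up 6-dimensional embedding, reduced to 4 dimensions at 4 bits each.
embedding = [0.12, -0.5, 0.33, 0.9, -0.07, 0.41]
small, step = reduce_embedding(embedding, keep_dims=4, bits=4)
print(small)                          # small integers instead of floats
print(cosine(embedding[:4], small))   # how much direction survived quantization
```

The information in the dropped dimensions is simply gone, which is where the "presumably at the cost of labeling accuracy" part comes from.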

> October 24, 2024, around the time that Enhanced Visual Search is believed to have debuted.

I love it when surprise features get silently added, then discovered a couple months later. Makes you wonder exactly how easy it would be for Apple to start scanning for tons of other stuff in addition to landmarks, now that they've built out the infrastructure for it.

It's really kind of them, too. Collecting data on all of your photos for free, holding a database of public places for free, scanning your photos against it for free, returning that data to you for free... They're so generous!

I love big brother