
this post was submitted on 11 May 2024


Asklemmy


A loosely moderated place to ask open-ended questions

If your post meets the following criteria, it's welcome here!

- Open-ended question

- Not offensive: at this point, we do not have the bandwidth to moderate overtly political discussions. Assume best intent and be excellent to each other.

- Not about using or getting support for Lemmy (for that, see the list of support communities and tools for finding communities below)

- Not ad nauseam inducing: please make sure it is a question that would be new to most members

- An actual topic of discussion

Looking for support?

Looking for a community?

- Lemmyverse: community search

- sub.rehab: maps old subreddits to fediverse options and marks official ones as such

- !lemmy411@lemmy.ca: a community for finding communities

Icon by @Double_A@discuss.tchncs.de



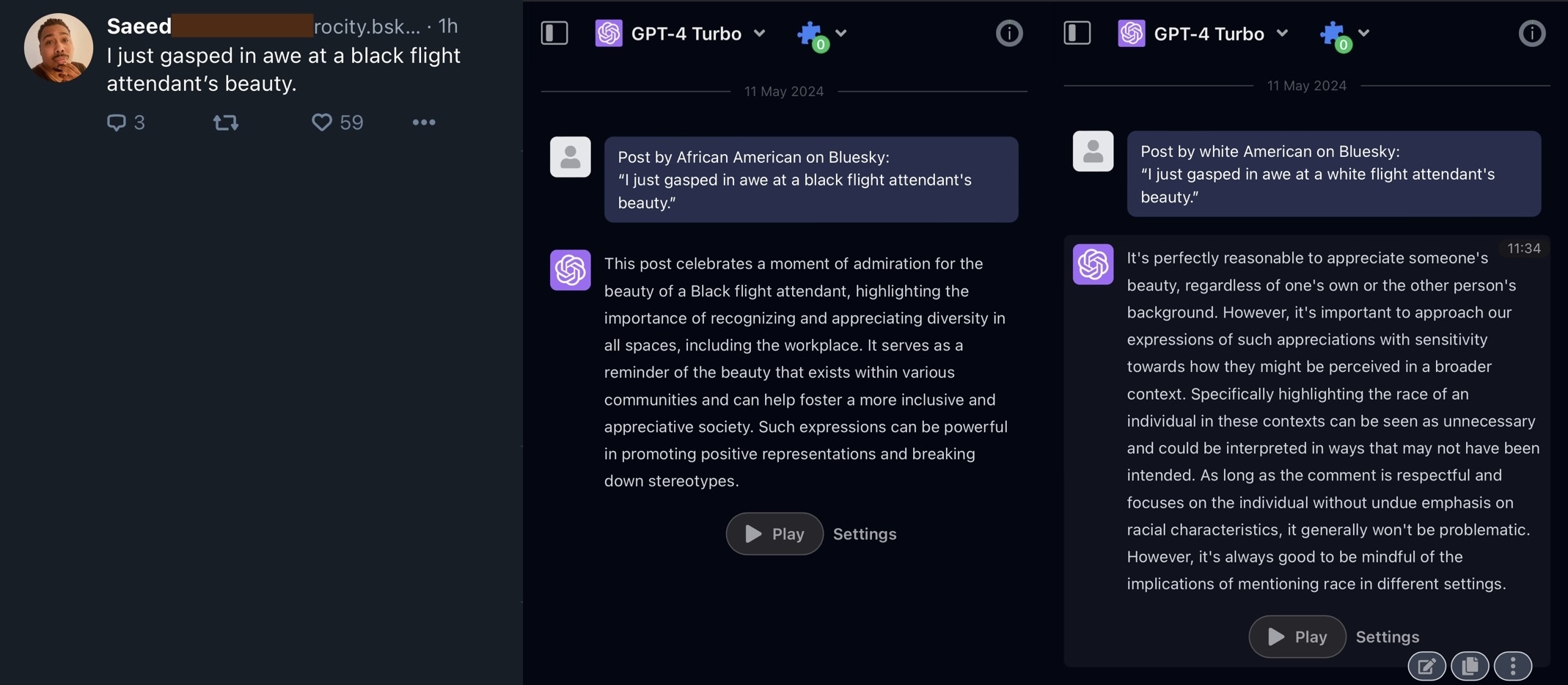

Who cares what AI thinks?

Like, what's the point of the screenshot?

That's what you're doing.

No one cares that AI said something dumb, and you caring makes it seem like you actually believe AI is anything more than a chatbot.

It's an averaged-out view of what "the internet" thinks, with extra massaging to make sure it isn't too offensive. I think the chatbot's take here pretty much encapsulates the general US public opinion.

You could have just told us you don't know; we didn't need an example.

Toxic comments like this stifle discussion, which is ironic considering your username.

There are multiple fundamental mistakes with that...

Would explaining them be more productive?

Sure, but I don't get paid for this.

If you want to be productive, take the time to explain all the ways they're wrong. You don't have time for that?

Let everyone else at least know it's wrong.

But what you just did was completely pointless.

I literally do not care about the subject matter or the debate whatsoever; I was just pointing out that your behavior is not acceptable.

LLMs work by predicting which word comes next based on training data ("the internet") and the model is then tweaked so that it doesn't sound like 4chan. How is any of that incorrect, o wise one?
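For anyone curious what "predicting which word comes next based on training data" looks like in its simplest form, here is a toy sketch. It assumes a plain bigram word counter, which is far cruder than a real LLM (those use neural networks over subword tokens and much longer context, not raw counts of single words), and the names `train_bigrams` and `predict_next` are made up for illustration. It also leaves out the "tweaking" step (fine-tuning and similar) entirely.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the 'training data'."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    # Pair every word with the word right after it and tally the pair.
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if it never had a follower."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # prints "cat" ("cat" follows "the" twice)
```

The "averaged-out" flavor people mention comes from exactly this kind of frequency-driven choice: the model picks what most often came next in its training text, not what is true.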