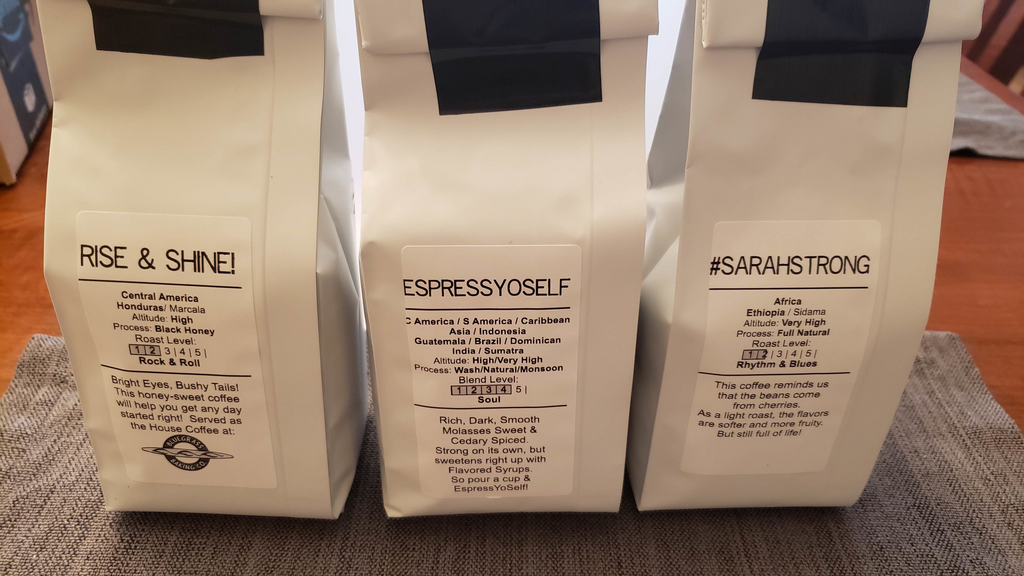

As I continue my palate-developing tour of Lexington, KY's local roasters, here are three selections from GarageBean'd. I've tasted each as 16:32, ~30s espresso and as 11:200 Hoffmann-method AeroPress, freshly ground to suit each method.

They're super reasonably priced ($10-15/lb) for single-origin small-roaster coffees, and they sell bags from 8 oz to 1 lb (plus samplers) so you can try things out. I appreciate both.

All three I picked are extremely characterful, and all are at least pretty good. I intentionally chose coffees that would be interesting for palate development rather than ones suited specifically to my tastes. I've done the reading on non-washed process coffees but hadn't tried many, so they were a big part of the focus for this round.

EspressYoSelf is a fairly classic modern espresso blend; the component list includes beans from Brazil, Guatemala, the Dominican Republic, Ethiopia, and India, with a mix of Washed, Dry, Natural, and Monsooned components. They roast it just barely into medium-dark, a bit over 5/10 on their scale, which is around my preference for espresso. It's SUPER complex, with lots of molasses and spice notes. It does have a slightly "dirty" finish compared to some other espresso blends from my local tour; I'm not a good enough taster to say for sure why, but I suspect the monsooned (probably Indian robusta) component. The body reminds me of stabilized whipped cream: it starts out feeling really substantial and then thins in your mouth, which is nifty. Good overall. Not enough to displace Nate's from my #1 spot for local espresso blends, but definitely worth having.

#sarahstrong "Light" (they sell it at two roast levels) is a Natural process coffee from Sidama, Ethiopia, roasted light (1.5/10 on their scale). It's super interesting, but a little funkier than I'm generally into. It's not bad, and the body and fruitiness are excellent... but there's a lot of that "rotten fruit" fermented flavor that naturals are known to pick up, and it's a bit much for me as an everyday coffee. Definitely a fun pick if you want to try a face full of natural process character.

Rise & Shine! is a Black-Honey process coffee from Marcala, Honduras, roasted to Medium (4.5/10). It would count as a high-character coffee in most company, but here it comes off as the most normal of the three. It's naturally quite sweet up front, with a very prominent dark honey/brown sugar flavor, a bitter note in the middle, and a very clean, classic "nice cup of coffee" finish. It's the house coffee at a local bakery and really suits the role: it always feels like something I should be drinking out of china alongside a fancy pastry.
