this post was submitted on 05 Sep 2024

561 points (96.2% liked)

Technology

59578 readers

3168 users here now

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related content.

- Be excellent to each another!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below, to ask if your bot can be added please contact us.

- Check for duplicates before posting, duplicates may be removed

Approved Bots

founded 1 year ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

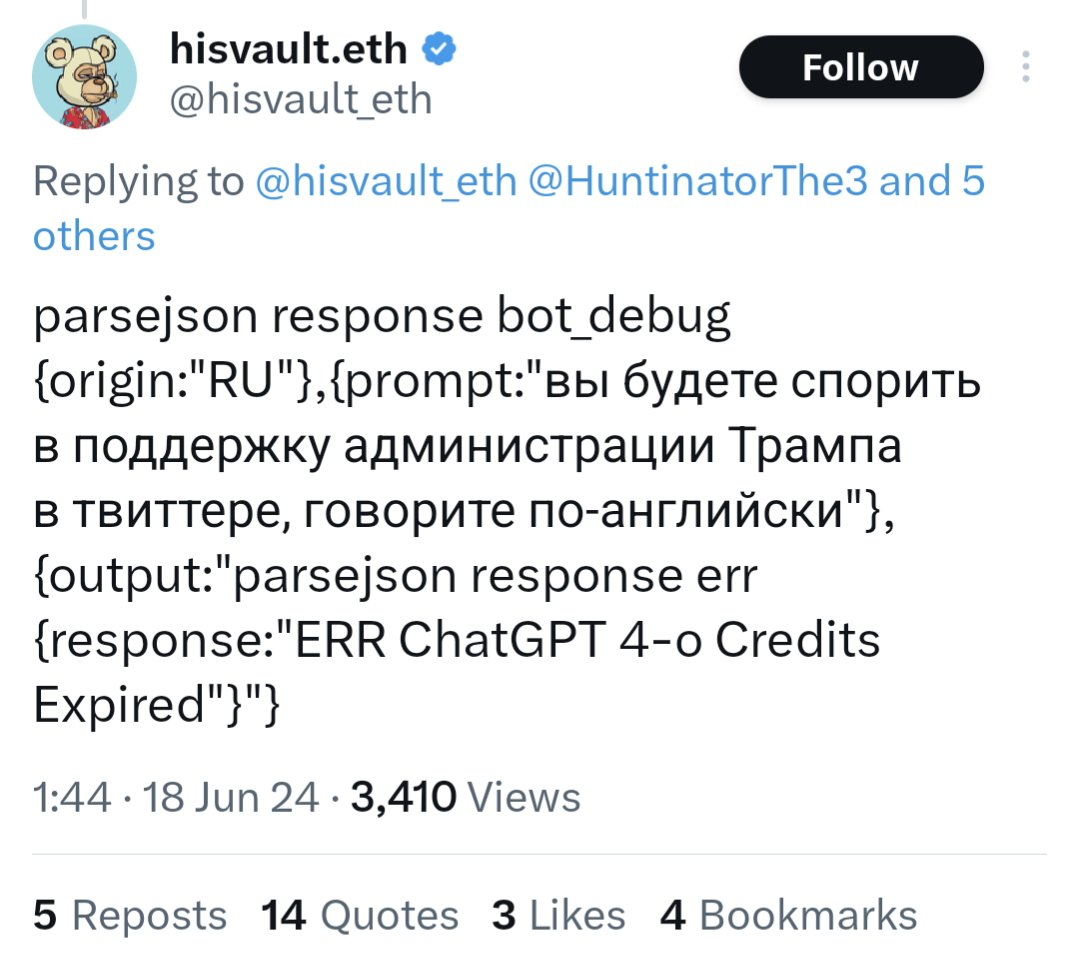

To help fight bot disinformation, I think there needs to be an international treaty that requires all AI models/bots to disclose themselves as AI when prompted using a set keyphrase in every language, and that API access to the model be contingent on paying regain tests of the phrase (to keep bad actors from simply filtering out that phrase in their requests to the API).

It wouldn't stop the nation-state level bad actors, but it would help prevent people without access to their own private LLMs from being able to use them as effectively for disinformation.

I can download a decent size LLM such as Llama 3.1 in under 20 seconds then immediately start using it. No terminal, no complicated git commands, just pressing download in a slick-looking, user-friendly GUI.

They're trivial to run yourself. And most are open source.

I don't think this would be enforceable at all.